It was recently in my best interest to learn how to make use of the PROXY protocol in support of red team infrastructure. If you’re familiar with developing scalable systems or using load balancers then you may be well aware of the PROXY protocol already. But if you’re not familiar with it, the basic concept is that your load balancer prefixes the original request with a PROXY line, like so:

PROXY TCP4 192.168.0.1 192.168.0.11 56324 443\r\n

GET / HTTP/1.1\r\n

Host: 192.168.0.11\r\n

\r\n

We can break this down into each of its parts:

PROXY indicates that it is version 1 of the PROXY protocol

TCP4 indicates the proxied protocol. TCP4 means TCP over IPv4, TCP6 means TCP over IPv6.

192.168.0.1 indicates the source address. This is the originating client address.

192.168.0.11 indicates the destination address.

56324 indicates the source port

443 indicates the destination port

\r\n represents the end of the PROXY header

GET / HTTP/1.1\r\n is the start of the HTTP request
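If it helps to see that breakdown as code, here is a minimal Python sketch that splits the example above into its fields. The names parse_proxy_v1 and ProxyV1Header are my own for illustration and not part of any library, and it only handles the TCP4 and TCP6 forms described here.

```python
import ipaddress
from typing import NamedTuple, Tuple


class ProxyV1Header(NamedTuple):
    """Fields of a version 1 PROXY line (hypothetical helper type)."""
    protocol: str
    src_addr: str
    dst_addr: str
    src_port: int
    dst_port: int


def parse_proxy_v1(data: bytes) -> Tuple[ProxyV1Header, bytes]:
    """Split a buffer into its PROXY v1 header and the payload after it."""
    # The header is a single ASCII line terminated by \r\n.
    line, sep, rest = data.partition(b"\r\n")
    if not sep:
        raise ValueError("no CRLF found, header is incomplete")
    fields = line.decode("ascii").split(" ")
    if len(fields) != 6 or fields[0] != "PROXY":
        raise ValueError("not a PROXY v1 TCP header")
    _, proto, src, dst, sport, dport = fields
    if proto not in ("TCP4", "TCP6"):
        raise ValueError("unsupported proxied protocol: " + proto)
    # ip_address() raises ValueError if either address is malformed.
    ipaddress.ip_address(src)
    ipaddress.ip_address(dst)
    return ProxyV1Header(proto, src, dst, int(sport), int(dport)), rest


raw = (
    b"PROXY TCP4 192.168.0.1 192.168.0.11 56324 443\r\n"
    b"GET / HTTP/1.1\r\n"
    b"Host: 192.168.0.11\r\n"
    b"\r\n"
)
header, payload = parse_proxy_v1(raw)
print(header.src_addr, header.src_port)  # 192.168.0.1 56324
```

Everything after the first \r\n comes back untouched in payload, which is the whole point: the original request is still there, just with one extra line in front of it.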

In typical HTTP proxying situations, your load balancer might insert an X-Forwarded-For header that contains the source IP address. If you want to retain the source IP address using HTTPS, you would need to let your load balancer decrypt the TLS connection so that it can insert the header. Then maybe it re-encrypts it to the backend using a separate TLS connection. Fine and dandy for most use cases, honestly.

But in offensive infrastructure, we don’t really use the term “load balancer” and instead we use “redirectors”. These redirectors are meant to protect our infrastructure from being burned, with the understanding that the redirectors are probably easier to tear down and spin up than the rest of the infrastructure. If you want to replicate sketchier threats, you will probably want to use redirectors on various services that aren’t major cloud providers. This is colloquially referred to as the made-up country of sketchyasfuckistan, coined (I think?) by my friends Topher and Mike.

If you’re using sketchy cloud providers, you probably don’t want to deal with moving SSL certificates around when the redirector gets burned, and furthermore you probably don’t want to trust that keys generated on that box are kept solely on that box, or that you’re the only one on that box. Generally you want to trust it as little as possible. This can be achieved by using dumb TCP redirectors, several of which are documented on the preeminent source of red team infrastructure design by [bluscreenofjeff]. But dumb TCP redirectors can lose information about the originating IP address, which can make it difficult to make decisions once the traffic gets back to your trusted infrastructure.

Enter the PROXY protocol. This bad boy lets your TLS redirectors stay dumb without losing information about the client connection. As long as your redirector and your destination server both support PROXY, you get to maintain the originating client address. The redirector receives a TLS connection from the client, prepends the PROXY header to the start of the TCP stream, and then passes everything along to the upstream server untouched. The upstream server (the one that has the TLS keys) also has to support the PROXY protocol, otherwise you’ll wind up with errors.
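To make the redirector side concrete, here is a rough Python sketch of the one thing a PROXY-aware redirector adds: a header built from the client connection's addresses, sent ahead of the bytes it relays. The function name build_proxy_v1 is my own invention, and this is not how nginx implements it internally, just the same idea in a few lines.

```python
import ipaddress


def build_proxy_v1(src_addr: str, dst_addr: str,
                   src_port: int, dst_port: int) -> bytes:
    """Build a version 1 PROXY line for a TCP connection (hypothetical helper)."""
    src = ipaddress.ip_address(src_addr)
    dst = ipaddress.ip_address(dst_addr)
    # Both ends of the proxied connection must share one address family.
    if src.version != dst.version:
        raise ValueError("source and destination must be the same IP version")
    proto = "TCP4" if src.version == 4 else "TCP6"
    line = f"PROXY {proto} {src} {dst} {src_port} {dst_port}\r\n"
    return line.encode("ascii")


# The header goes out once, before any client data. The TLS bytes that
# follow are relayed exactly as received, so no keys are needed here.
header = build_proxy_v1("192.168.0.1", "192.168.0.11", 56324, 443)
print(header)  # b'PROXY TCP4 192.168.0.1 192.168.0.11 56324 443\r\n'
```

Because the header sits in front of the TLS handshake rather than inside it, the redirector never has to decrypt anything to pass the client address along.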

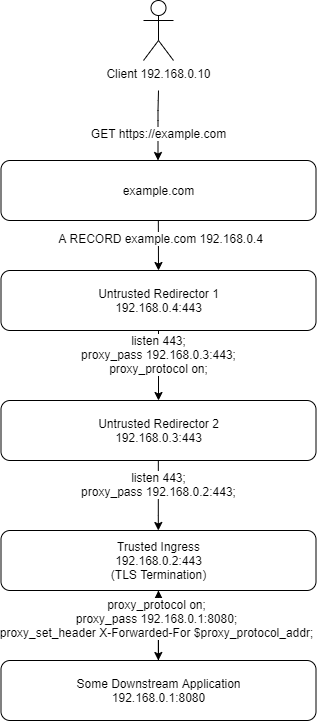

Diagram

Steps To Reproduce

Okay I’ve done enough explaining probably, right? You probably just want to see the configs. Let’s jump into that. I’m going to label the config blocks based on the above diagram. This configuration was tested using nginx version: nginx/1.14.0 (Ubuntu) as it ships out of the box with Ubuntu 18.04.4 LTS. It was last tested on 2020-02-15.

example.com

Point your intended DNS record to the IP address of Untrusted Redirector 1. In the example, this is 192.168.0.4.

@ 300 IN A 192.168.0.4

Untrusted Redirector 1

This is our ingress redirector: the IOC that the blue team will see when malware reaches out to it. We don’t want it to have any key material on it and want it to be as easy as possible to respin if needed. All it does is listen on 443 and point to Untrusted Redirector 2.

Create a file called /etc/nginx/stream_redirect.conf

stream {

server {

listen 443;

proxy_pass 192.168.0.3:443;

proxy_protocol on;

}

}

Then you can add this line to your /etc/nginx/nginx.conf. Ensure that this is NOT inside any other blocks.

include /etc/nginx/stream_redirect.conf;

Restart nginx with sudo systemctl restart nginx and then move on to Untrusted Redirector 2.

Untrusted Redirector 2

This is our mid point redirector. This is not strictly necessary and you could instead point Untrusted Redirector 1 directly to Trusted Ingress. It can be useful, though, if you want to disassociate the example.com indicator from your actual infrastructure. This is useful if your infrastructure is hosted inside the target environment, which is not uncommon for internal red teams. It keeps all the secrets inside the house.

Create a file called /etc/nginx/stream_redirect.conf

stream {

server {

listen 443;

proxy_pass 192.168.0.2:443;

}

}

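Then you can add include /etc/nginx/stream_redirect.conf; to your /etc/nginx/nginx.conf just like the previous redirector. Alternatively, instead of using nginx at this layer, you could use a dumber redirector like socat or simpleproxy. This layer does NOT need to be PROXY aware, and in fact it likely will not work if you set proxy_protocol on; on any midpoint: the midpoint would prepend a second PROXY header in front of the one from Untrusted Redirector 1, and Trusted Ingress would then find a stray PROXY line where it expects the TLS handshake to start.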
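Here is a small Python sketch of why a second PROXY header at the midpoint breaks things, assuming the receiving server strips exactly one header and then expects TLS. The helper names, the 203.0.113.7 client address, and the port numbers are all made up for illustration, and the ClientHello is just a placeholder that begins with the TLS handshake record type byte (0x16).

```python
TLS_HANDSHAKE = 0x16  # first byte of a TLS handshake record


def strip_one_proxy_header(stream: bytes) -> bytes:
    """Remove a single PROXY v1 line, as a PROXY-aware listener would."""
    line, sep, rest = stream.partition(b"\r\n")
    if not sep or not line.startswith(b"PROXY "):
        raise ValueError("stream does not start with a PROXY v1 header")
    return rest


def starts_tls_handshake(payload: bytes) -> bool:
    """Check whether the payload begins like a TLS handshake record."""
    return payload[:1] == bytes([TLS_HANDSHAKE])


# Placeholder bytes standing in for a real ClientHello.
client_hello = bytes([0x16, 0x03, 0x01]) + b"placeholder"

# Header added by Untrusted Redirector 1 (carries the real client address).
h1 = b"PROXY TCP4 203.0.113.7 192.168.0.4 56324 443\r\n"
# Header a misconfigured midpoint would add (carries Redirector 1's address).
h2 = b"PROXY TCP4 192.168.0.4 192.168.0.3 40112 443\r\n"

good = h1 + client_hello        # midpoint stays dumb
bad = h2 + h1 + client_hello    # midpoint also speaks PROXY

print(starts_tls_handshake(strip_one_proxy_header(good)))  # True
print(starts_tls_handshake(strip_one_proxy_header(bad)))   # False
```

In the bad case the address the ingress records is also the wrong one, since the outermost header describes the connection from Untrusted Redirector 1 rather than from the client.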

Restart nginx with sudo systemctl restart nginx and then move on to Trusted Ingress.

Trusted Ingress

This is our ingress system where TLS is finally terminated and we’re in a trusted environment. The key material for example.com resides on this system, and it’s where PROXY will also be terminated.

Example /etc/nginx/sites-enabled/example.com.conf file:

server {

server_name example.com;

location / {

proxy_pass http://192.168.0.1:8080;

proxy_set_header X-Forwarded-For $proxy_protocol_addr;

}

listen [::]:443 ssl ipv6only=on proxy_protocol; # managed by Certbot

listen 443 ssl proxy_protocol; # managed by Certbot

ssl_certificate /etc/letsencrypt/live/example.com/fullchain.pem; # managed by Certbot

ssl_certificate_key /etc/letsencrypt/live/example.com/privkey.pem; # managed by Certbot

include /etc/letsencrypt/options-ssl-nginx.conf; # managed by Certbot

ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem; # managed by Certbot

}

Notice the $proxy_protocol_addr variable that we use in X-Forwarded-For. This is the originating IP address from the PROXY protocol. You may wish to also log this in the Trusted Ingress nginx logs.

Example snippet of /etc/nginx/nginx.conf:

[...]

http {

[...]

##

# Logging Settings

##

log_format proxy '$proxy_protocol_addr - $remote_user [$time_local] ' '"$request" $status $body_bytes_sent "$http_referer" ' '"$http_user_agent"';

access_log /var/log/nginx/access.log proxy;

error_log /var/log/nginx/error.log;

[...]

}

You may also wish to only use the proxy format for the specific site configuration on Trusted Ingress, so you could leave the default access_log line in /etc/nginx/nginx.conf and instead add access_log /var/log/nginx/example.com.access.log proxy; to the /etc/nginx/sites-enabled/example.com.conf server block(s).
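If you want to pull the client address back out of those logs, a regex along these lines should do it. This is a sketch that assumes the exact proxy log_format shown above. The sample line is made up by me (including the 203.0.113.7 address and the user agent), not real output.

```python
import re
from typing import Dict, Optional

# Mirrors the "proxy" log_format: the first field is $proxy_protocol_addr.
LOG_RE = re.compile(
    r'(?P<client>\S+) - (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<bytes>\d+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line: str) -> Optional[Dict[str, str]]:
    """Return the named fields of one access log line, or None if no match."""
    match = LOG_RE.match(line)
    return match.groupdict() if match else None


# Hypothetical log line in the format above.
sample = (
    '203.0.113.7 - - [15/Feb/2020:12:00:00 +0000] '
    '"GET / HTTP/1.1" 200 612 "-" "curl/7.58.0"'
)
fields = parse_line(sample)
print(fields["client"], fields["status"])  # 203.0.113.7 200
```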

Downstream Application

If you’re following this example exactly, then the downstream application needs to be aware of the X-Forwarded-For header in order to take advantage of it. You can do client IP filtering at the nginx layer to redirect unwanted IPs to a different place, so it’s not entirely necessary for the downstream application to even care about the IP address.
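For the downstream side, here is a minimal sketch of an application that reads the header, using only the Python standard library. The handler, the client_ip helper, and the run function are hypothetical names of mine. It only makes sense to trust X-Forwarded-For like this because the sole thing talking to the application is Trusted Ingress.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def client_ip(headers, peer_ip: str) -> str:
    """Prefer the address nginx passed along, else fall back to the TCP peer."""
    forwarded = headers.get("X-Forwarded-For")
    if not forwarded:
        return peer_ip
    # nginx sets a single value here, but take the first entry to be safe.
    return forwarded.split(",")[0].strip()


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        ip = client_ip(self.headers, self.client_address[0])
        body = f"hello {ip}\n".encode("ascii")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(host: str = "192.168.0.1", port: int = 8080) -> None:
    """Serve forever on the address that Trusted Ingress proxies to."""
    HTTPServer((host, port), Handler).serve_forever()
```

Calling run() starts the listener on 192.168.0.1:8080 to match the proxy_pass target in the example config. Swap in whatever address your application actually binds to.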

There’s also a way around this restriction so that the downstream application doesn’t require any knowledge of the X-Forwarded-For header, but it requires an nginx module (ngx_http_realip_module) that, as far as I could tell, doesn’t ship with the Ubuntu nginx packages (running nginx -V and looking for --with-http_realip_module will tell you whether your build has it). It’s also not available as a separate installable module, and I’d rather not include a whole section in this blog post about building nginx from source. Perhaps a topic for another post.

Conclusion

I don’t know if there is a different way that red teamers have solved this problem, but this is a neat solution because it doesn’t require any middle servers to have key material or be trusted. Technically they have some minor trust to not send the wrong client IP address, but it doesn’t introduce any additional problems versus just having the dumb redirector in the middle, since you’d only be getting the redirector IP and be unable to make any decisions based on that anyways. So anyways, go forth and PROXY on.

As usual…