A friend sent me a tunnel link that he had set up manually with socat and the nginx ingress controller in his k8s cluster. He made an offhand comment about needing a better way to set up tunnels, and it got me thinking. This post is the result of a long night of hacking away at my own tunnel solution.

If you want to jump into the nitty gritty of how it works, you can skip to Roll Your Own Tunnel. If you want to skip the nitty gritty and figure it out yourself, Putting It All Together is where you want to be.

Criteria

I had a handful of criteria that I wanted to consider for a functional solution.

- I wanted it to run on my own infrastructure - I was likely going to be doing TLS termination at the public web server, since my development servers aren’t going to be using publicly trusted TLS certificates.

- I wanted the tunnels to be created under my own domain. I chose dev.0xda.de to be the parent of the tunnels – any new tunnel should be available underneath .dev.0xda.de.

- I didn’t want to leak the links to new tunnels automatically and invite automated scanning – dev services are often running in debug or development mode, which may leak sensitive information even in simple cases like a 404 response. This is an important distinction for one particular solution I looked at, which we’ll cover in the next section.

With these criteria in mind, I looked at existing solutions. Unfortunately while looking for solutions I could potentially self host, I mostly was reading awesome-selfhosted which didn’t seem to have a good section on tunnels. It turns out there’s a whole separate list called awesome-tunneling, which has a handful of criteria that are somewhat similar to mine. But I didn’t know about this list until after I spent my whole night working on this, so I won’t really be touching on any items from that list.

Solutions I Considered

Ngrok

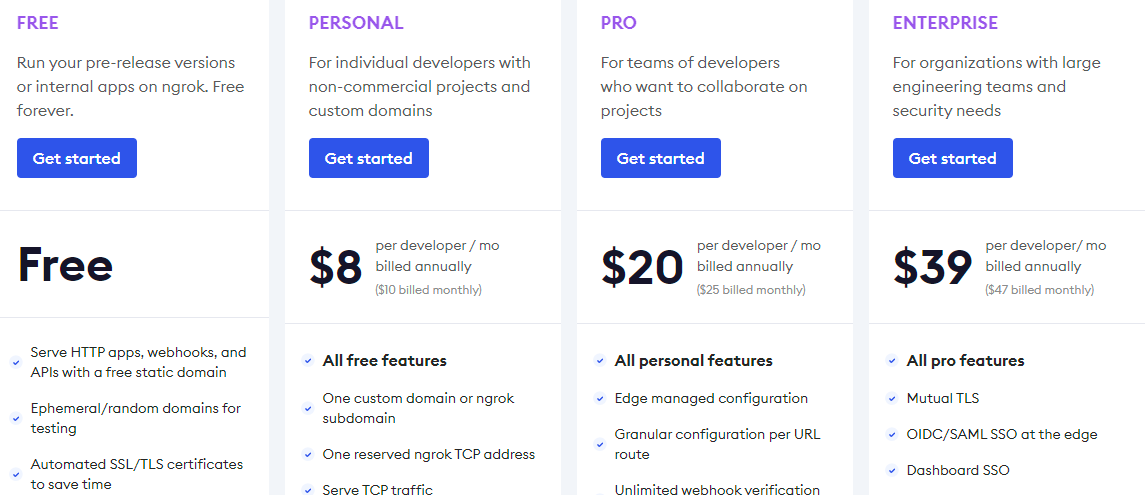

I have been using ngrok off and on for years. It is okay – it’s convenient to spin up tunnels quickly, and even the free tier allows you to reserve one static domain that you can reuse. This makes testing development of flows like OAuth2 login easier, especially on providers that restrict the redirect URI to publicly routable domains.

To get a custom domain, you have to use the $10/mo or $96/yr “Personal” plan. This is fairly reasonable, if I’m being honest. But it doesn’t meet my first requirement – Ngrok controls the TLS termination before forwarding the request to my service. Ngrok can potentially see whatever I’m developing, can potentially see secrets that leak in debug pages, etc. I have no reason to really worry about this, but in the pursuit of my ideals, I moved on to another option.

Tailscale Funnel

I love Tailscale. I’ve been a free tier user for over a year now, setting it up is incredibly easy, and I can use the Wireguard mesh network to easily connect my phone to my home services while I’m on the go. On top of this, I can even use Tailscale to restrict my administrative ports on web servers, such that you have to either connect through my tailnet or connect through my bastion host.

Tailscale offers a feature called Funnel, which works similarly to ngrok as a way to route internet traffic into your local service. It doesn’t meet the self-hosted criteria or hosting on my own domain, but I was already using Tailscale so it was easy to test out.

One of the cool things about Tailscale Funnel is that it handles the TLS termination on your device – this is an improvement over ngrok’s basic proxy service, since I know that the only people who can see the traffic are my local service and the client requesting it. But this comes with an important caveat that I was surprised to learn about.

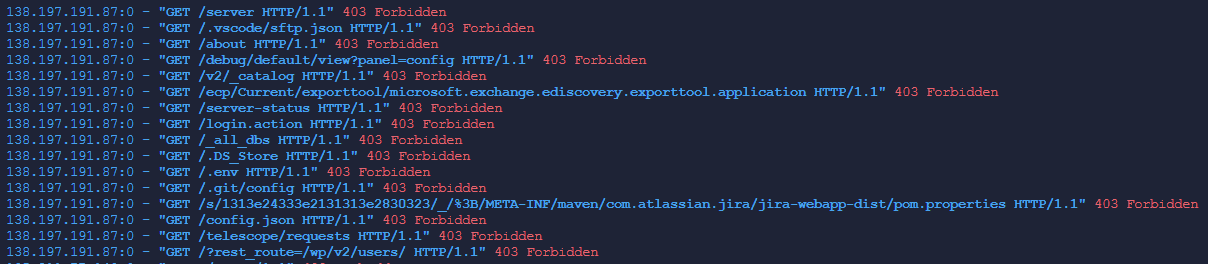

Within minutes of running my tailscale funnel, my Django service I was running for testing was being bombarded by internet scanning noise. This was surprising to me, but in hindsight it makes perfect sense.

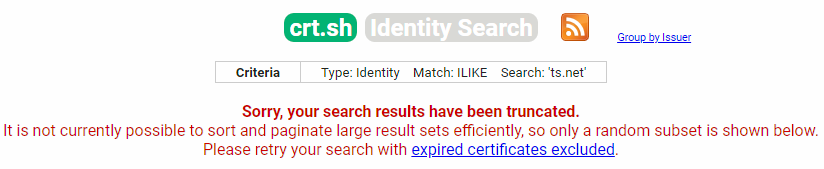

Without going into too much detail about the Tailscale product, you have a “tailnet” address of <unique-tailnet>.ts.net. For simplicity’s sake, let’s say my tailnet is named ellingson-mineral.ts.net. This address can be used as the parent domain of all devices on your tailnet, so you can access the Gibson by visiting gibson.ellingson-mineral.ts.net from any device connected to your tailnet.

Remember how I said that Tailscale funnel handles TLS termination on your device? Well, in order to do that, your device has to control the keys for the certificate. And since you want it to be publicly available, you need a certificate that is trusted. So Tailscale fetches a certificate from Let’s Encrypt for your host.

So the first time you set up a Tailscale funnel, your tailnet address shows up in Certificate Transparency Logs (CTL). A LOT of tailnet addresses are in CTL.

This could explain how my service started getting scanned minutes after being made available online.

While this service is really easy to use and really powerful for exposing services indirectly, I think I’m not a huge fan of it being immediately available and scannable online, especially if it’s a development service. It also doesn’t allow me to create tunnels with specific names – the tunnel will always just be gibson.ellingson-mineral.ts.net.

VS Code Developer Tunnels

I already use VS Code for most of my development, and they released a public preview of Dev Tunnels back in November 2023. This allows easily hooking up a development tunnel to a port on your local computer, allows persistent URLs for as long as you need, and it’s secure by default – the tunnels default to only being accessible to your own Microsoft or Github account. This is especially cool if you want to test things across your own devices, and it does appear to offer more granular access controls if you so desire them.

Either way, this service is cool but it relies on the generosity of Microsoft, tunnels my traffic through their relays, and I can’t really control the domain I want to use.

I didn’t spend a lot of time testing this, I’ve used it before and it’s okay. But I really just like the idea of being able to send someone a link on my own domain.

Roll Your Own Tunnel

So I was settling into rolling my own ngrok-like service. I spent maybe half an hour thinking about doing it with a simple HTTP application built with FastAPI that would broker a tunnel between clients and the server. I could front the API with nginx and write a simple client. But as I started considering proxying all traffic through websockets, I realized I didn’t need to write any of my own code to make this work.

While looking around, I found a post by Vallard called “my own ngrok”. This uses a simple nginx configuration and an ssh reverse proxy. These are both things I’ve used a great deal in the past. In Vallard’s post, he uses a single reusable hostname, and a single port. This works great for simplicity, and for most people this is probably more than enough. But I thought I could make it just a little bit better.

Wildcard Domains

First I wanted to tackle the ability to have arbitrary subdomains for tunnels. Some years ago, I figured out that you can do wildcard server_name blocks in nginx while I was trying to bypass the Twitter ban on sharing links to ddosecrets.com.

To make this work, we have to do a couple things.

- Create a wildcard A record in your DNS zone. E.g. *.dev 10800 IN A 51.81.64.16 routes all subdomains of *.dev.0xda.de to the host that is serving this website.

- Create a wildcard TLS certificate with Let’s Encrypt – you’ll want to make sure this can auto-renew, which will require some extra work on your part. We’re not going to cover the auto-renewal in this post.

- Create the following nginx config. This is loosely modified from Vallard’s post referenced above, to account for my domain name and my wildcard server_name.

Now, we can have arbitrary tunnel names without leaking them to cert transparency logs.

Enabling Multiple Simultaneous Tunnels

One major drawback with the configuration so far is that we can only set up one tunnel – our SSH reverse tunnel binds on port 7070, and our nginx config is routing all traffic to that port.

To fix this, we’re going to use a feature of ssh reverse tunnels that is probably a little bit lesser known. See, of course you can proxy to TCP ports like 7070. But you can also establish reverse proxies to a unix domain socket, which is just a file on disk.

This gives us a pretty cool capability to define a name for our tunnel on the client and have that name be available on the server as a unix domain socket.

ssh user@example.com -R /tmp/test.dev.0xda.de.socket:127.0.0.1:8000

The important bit here is the /tmp/test.dev.0xda.de.socket. We define this on the client, and it creates a file at /tmp/test.dev.0xda.de.socket on the server.
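The argument to -R has three parts here: the socket path to create on the server, then the local host and port that traffic should end up at. A minimal sketch of assembling it, where the helper name remote_forward_spec is made up for illustration and the /tmp/<name>.socket layout simply mirrors the command above:

```bash
#!/bin/bash
# remote_forward_spec is a hypothetical helper, not part of ssh.
# It prints "<remote socket path>:<local host>:<local port>" for ssh -R.
remote_forward_spec() {
  local domain="$1" port="$2"
  printf '/tmp/%s.socket:127.0.0.1:%s\n' "$domain" "$port"
}
```

With that defined, the earlier command could be written as ssh user@example.com -R "$(remote_forward_spec test.dev.0xda.de 8000)".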

We can then combine this with a slight modification to our nginx server block to have nginx send traffic to the /tmp/test.dev.0xda.de.socket socket.

We’re making use of the $host variable in nginx to pass to a named socket on disk. So if our request is to https://test.dev.0xda.de, then nginx will match this server block and then pass the request to /tmp/test.dev.0xda.de.socket.
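As a rough illustration of that mapping, here is a small generator that prints a server block of the shape described. This is a guess at a workable config under stated assumptions, not the config from this post: the function name render_tunnel_vhost, the exact server_name regex, and the omission of the TLS directives are all choices made for the sketch.

```bash
#!/bin/bash
# Hypothetical helper: print an nginx server block that routes each
# subdomain of a parent domain to /tmp/<host>.socket.
render_tunnel_vhost() {
  local parent="$1" escaped
  # Escape dots so they match literally in the server_name regex.
  escaped=$(printf '%s' "$parent" | sed 's/\./\\./g')
  cat <<EOF
server {
    # TLS listen and certificate directives omitted from this sketch.
    server_name ~^[a-z0-9-]+\\.${escaped}\$;

    location / {
        # \$host has already matched server_name, e.g. test.${parent}
        proxy_pass http://unix:/tmp/\$host.socket;
    }
}
EOF
}
```

Running render_tunnel_vhost dev.0xda.de prints the block to stdout, so it can be reviewed before it goes anywhere near /etc/nginx, and nginx -t will check the syntax once it is in place.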

There’s one glaring problem with this, though.

Linux Permissions

We’re sshing to our server as our own user, but our nginx server workers are running as www-data. When our socket gets created, only our own user can interact with it.

$ ls -la /tmp/test.dev.0xda.de.socket

srw------- 1 dade dade 0 Apr 14 02:04 /tmp/test.dev.0xda.de.socket

We need to let www-data read and write to this socket. There are a handful of things I tried, and the one that is probably the sketchiest is also the one I ended up selecting. But let’s take a look at the options first.

Giving www-data ssh authorized_keys…

… and then having my local tunnel command ssh in as the www-data user. This worked, sorta, but usually if I see www-data running ssh, or anything, really, I get pretty sketched out. And plus, the user’s shell defaults to /usr/sbin/nologin – so the shell immediately terminates after ssh authenticates.

Make a group that my user and www-data belong to

This probably could work, but I was having trouble with it because whenever I tried to chown dade:grok /tmp/test.dev.0xda.de.socket, I was met with an Operation not permitted error. I suspect this is something we could overcome, and if it does work it is better than the alternatives, because only our user and www-data would be able to talk to the socket.
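One possible cause, offered as a guess rather than a diagnosis: a non-root user can only change a file’s group to a group they belong to, and membership added to an account usually only applies to sessions started after the change. A quick sketch for checking what the current session actually has (in_group is a hypothetical helper name):

```bash
#!/bin/bash
# Hypothetical helper: succeed if the current session's user is a member
# of the named group, according to `id -nG`.
in_group() {
  local wanted="$1" g
  for g in $(id -nG); do
    [ "$g" = "$wanted" ] && return 0
  done
  return 1
}
```

If in_group grok fails in the ssh session that owns the socket, logging out and back in before retrying the chown would be the first thing to try.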

chmod o+rw

What I ultimately ended up doing is the dumbest possible solution. We can just run chmod o+rw /tmp/test.dev.0xda.de.socket and allow any user, any process on the server to communicate with this socket. Since ultimately I’m granting the internet read/write access to this socket anyways, I decided I was okay with this for now. If I was in a shared hosting environment with other users, I might reconsider – but in that scenario, I’d also probably not be able to make the nginx config changes necessary, so maybe that scenario is irrelevant.
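To confirm the change took effect, the last octal digit of the mode tells you what “other” users can do. A small sketch, assuming GNU stat (as found on Ubuntu) and using a made-up helper name, others_can_rw:

```bash
#!/bin/bash
# Hypothetical helper: succeed if "other" users have both read and write
# permission on the given path. Relies on GNU stat's -c '%a' format.
others_can_rw() {
  local mode other
  mode=$(stat -c '%a' "$1") || return 2
  other=$(( mode % 10 ))          # last octal digit = permissions for others
  (( (other & 6) == 6 ))          # 4 = read, 2 = write
}
```

For the socket shown earlier (srw-------) this fails; after chmod o+rw it succeeds.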

But I don’t want to have to run this every time I start the tunnel, that’s annoying.

The world’s sketchiest automation

To automatically run a command when we ssh in to establish the tunnel, we have a bunch of options. We could set it up to run a non-interactive ssh session and run a pre-defined bash script, for instance. But for reasons I don’t remember, this didn’t really work well for me. We could also use our various shell rc files to attempt it whenever our shell starts up. But this also isn’t my favorite option, since I could start shells inside of screen or tmux, for instance.

Instead, I specifically only want to run this bit of code when I establish a new ssh session. Enter my old persistence friend ~/.ssh/rc. This is an ssh-specific configuration file that runs when you establish a new ssh connection – both for interactive logins as well as remote commands.

Our naïve solution for this can begin with the following:

chmod o+rw /tmp/*.dev.0xda.de.socket 2>/dev/null

But this runs every time, even when I don’t specify a tunnel. It also blanket runs across all dev sockets in the tmp directory, rather than only running against the specific tunnel we’re establishing with our ssh session.

What we need is to pass the tunnel domain to the server, that way the process on the server knows the exact domain name to use. This will also be helpful when we talk about cleaning up the tunnel afterwards.

In ssh, you can use an option called SendEnv to send an environment variable from the local environment to your remote environment. The sshd server must be configured to support this, and I’m probably about to make some unix grey-beards very angry with my next suggestion.

On my Ubuntu 20.04 server, sshd_config allows certain variables to be sent by default through its AcceptEnv directive.

AcceptEnv LANG LC_*

That wildcard LC_* is our saving grace here. ANY environment variable that begins with LC_ can be sent from a client to the server, should the client so choose. So let’s abuse that with a new variable called LC_GROKDOMAIN.

So now our ssh command to start the tunnel looks like this:

export LC_GROKDOMAIN=test.dev.0xda.de

ssh user@example.com -o SendEnv=LC_GROKDOMAIN -R /tmp/$LC_GROKDOMAIN.socket:127.0.0.1:8000

And we can update our ~/.ssh/rc file to only run with this new information.

if [ -n "$LC_GROKDOMAIN" ]; then

chmod o+rw /tmp/$LC_GROKDOMAIN.socket 2>/dev/null

echo "You can now visit https://$LC_GROKDOMAIN in your browser"

fi
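Since LC_GROKDOMAIN comes from the client and ends up in a filesystem path, it may be worth checking its shape before acting on it, if only to catch typos. The sketch below sticks to POSIX sh constructs because sshd runs ~/.ssh/rc with sh rather than bash; the function name is_valid_tunnel_domain and the allowed character set are assumptions made for this example.

```bash
#!/bin/sh
# Hypothetical helper: accept only lowercase names under .dev.0xda.de,
# with no slashes, empty labels, or leading dots.
is_valid_tunnel_domain() {
  case "$1" in
    ''|.*|*..*|*[!a-z0-9.-]*) return 1 ;;  # empty, leading dot, "..", or odd characters
    *.dev.0xda.de) return 0 ;;             # must sit under the tunnel parent domain
    *) return 1 ;;
  esac
}
```

In ~/.ssh/rc the condition could then become if is_valid_tunnel_domain "$LC_GROKDOMAIN"; then ... fi, which also covers the empty case.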

Cleaning up the socket

Now that we have the automation set up to give nginx the ability to read/write to our socket when we establish our ssh connection, we have one more annoying thing that happens on the server to contend with. Cleaning up.

When we’re done with our socket, we want to remove the file from /tmp/ – otherwise if we try to reuse the socket next time, the forward will fail since the file already exists.

My really hacky way to do this was to add a conditional block to my ~/.bash_logout. This works well enough when I close the session when I’m done with it.

if [ -n "$LC_GROKDOMAIN" ]; then

rm /tmp/$LC_GROKDOMAIN.socket 2>/dev/null

fi

Note: This unfortunately leaves the socket file hanging around in the event that your ssh connection dies through anything other than a clean exit. There is almost certainly room for improvement here, but I was at the end of my knowledge for code that automatically gets executed in my ssh sessions. Failure to clean up the socket will result in the next attempt to establish the tunnel failing until you remove the socket file and re-connect.
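For the manual cleanup case, a slightly more careful rm helps avoid deleting the wrong thing. This is a minimal sketch with a made-up function name, remove_stale_socket; it cannot tell whether a socket is still in use, so it should only be run for a tunnel name you know is dead.

```bash
#!/bin/sh
# Hypothetical helper: remove the path only if it is a unix socket.
# Anything else at that path is left alone and reported.
remove_stale_socket() {
  sock="$1"
  if [ -S "$sock" ]; then
    rm -f -- "$sock"
  elif [ -e "$sock" ]; then
    echo "refusing to remove non-socket: $sock" >&2
    return 1
  fi
}
```

OpenSSH’s sshd_config also documents a StreamLocalBindUnlink option that removes an existing socket file before a new forward binds to it. Whether it fits this setup is untested here, but it looks like the obvious thing to try.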

Streamlining the Client Experience

Okay so far so good. We can run a single ssh command and have a new tunnel set up on our infrastructure, on our domain, and we haven’t even introduced any new code. We already had nginx, and we already had ssh. We can have as many tunnels as we can have unix domain sockets and nginx will handle routing requests to the appropriate tunnel.

But the command is kind of clunky and not easy to use. We have to export the domain variable, we have to write out a long ssh command, it’s just not fun. Let’s write a wrapper that simplifies things.

Consider our current process for setting up a new tunnel:

export LC_GROKDOMAIN=test.dev.0xda.de

ssh user@example.com -o SendEnv=LC_GROKDOMAIN -R /tmp/$LC_GROKDOMAIN.socket:127.0.0.1:8000

Doing this manually also leaves LC_GROKDOMAIN hanging around in our shell, which might cause some confusion the next time we want to open a tunnel. It’s also just a lot to type out.

We want our interface to be simple. grok <port> [<name>] – port is required, and name is optional. We have to know what port to forward the traffic to, but we don’t necessarily have to designate a name.
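Since the port is the one required argument, a wrapper could also check that it looks like a port before handing it to ssh. A small sketch of such a check (valid_port is a hypothetical name and not part of the script in this post):

```bash
#!/bin/bash
# Hypothetical helper: succeed only for an integer between 1 and 65535.
valid_port() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # empty or contains a non-digit
  esac
  [ "$1" -ge 1 ] && [ "$1" -le 65535 ]
}
```

A wrapper could call valid_port "$1" || { echo "Usage: grok <port> [<name>]"; return 1; } before doing anything else.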

A simple bash script later, and we’ve got exactly what we need.

#!/bin/bash

grok() {

TOP_LEVEL_DOMAIN="dev.0xda.de"

if [ -z "$1" ]; then

echo "Usage: grok <port> [<name>]"

return 1

fi

local port="$1"

local name="$2"

if [ -z "$name" ]; then

# Generate a random 12-digit hexadecimal string

name=$(openssl rand -hex 6)

fi

echo "Setting up ${name}.${TOP_LEVEL_DOMAIN}"

export LC_GROKDOMAIN="${name}.${TOP_LEVEL_DOMAIN}"

ssh user@example.com -o ControlPath=none -o SendEnv=LC_GROKDOMAIN -R /tmp/$LC_GROKDOMAIN.socket:127.0.0.1:$port

}

grok "$@"

I put this in .local/bin/grok, which is in my path, and now I can just run grok 8000 test. I also added a feature that generates a random 12-digit hexadecimal tunnel name if we don’t specify one. I also explicitly disable ControlPath in my command – I noticed that our tunnels don’t reliably close if you make use of ControlMaster at all.
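The name length follows from the byte count: each random byte becomes two hex characters, so 6 bytes gives 12. Here is a sketch of the same idea with a fallback for machines without openssl; the fallback path and the function name random_tunnel_name are additions for illustration only.

```bash
#!/bin/bash
# Hypothetical helper: print 12 random lowercase hex characters.
random_tunnel_name() {
  if command -v openssl >/dev/null 2>&1; then
    openssl rand -hex 6
  else
    # Read 6 bytes from the kernel RNG and print them as hex,
    # stripping the spaces and newline that od adds.
    od -An -N6 -tx1 /dev/urandom | tr -d ' \n'
    echo
  fi
}
```

Calling name=$(random_tunnel_name) gives a value that can be dropped straight into the subdomain.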

Limitations

There are some limitations to this method that I feel are important to address.

- If the socket stays behind on the remote host, our tunnel will fail next time and we have to manually connect in and delete it, then disconnect and reconnect our ssh session. (Technically we can do this with control sequences, but that’s also a topic for a different time)

- Our current implementation keeps the interactive session open the whole time – it would be cool if we could skip the interactive session but keep the session open until we close it. Due to the first limitation, the interactive session is just more useful right now.

- This version of this project can’t handle arbitrary TCP tunneling – the nginx block is in an http block. We can technically set up an arbitrary named TCP tunneling service, but that’s definitely a topic for another day.

- The HTTP logs for basic web servers can’t distinguish between clients – E.g. python3 -m http.server 8080 results in all requests having a client IP of 127.0.0.1. I think this can be handled in the nginx config, but I haven’t looked further into it yet.

Putting It All Together

This should be everything you need to replicate this configuration. Adjust domain names and paths appropriately.

(Remote) /etc/nginx/sites-available/dev.0xda.de.conf

(Remote) ~/.ssh/rc

if [ -n "$LC_GROKDOMAIN" ]; then

chmod o+rw /tmp/$LC_GROKDOMAIN.socket 2>/dev/null

echo "You can now visit https://$LC_GROKDOMAIN in your browser"

fi

(Remote) ~/.bash_logout

# rest of your config here ...

if [ -n "$LC_GROKDOMAIN" ]; then

rm /tmp/$LC_GROKDOMAIN.socket 2>/dev/null

fi

(Local) ~/.local/bin/grok – Make sure ~/.local/bin is in your $PATH

#!/bin/bash

grok() {

TOP_LEVEL_DOMAIN="dev.0xda.de"

if [ -z "$1" ]; then

echo "Usage: grok <port> [<name>]"

return 1

fi

local port="$1"

local name="$2"

if [ -z "$name" ]; then

# Generate a random 12-digit hexadecimal string

name=$(openssl rand -hex 6)

fi

echo "Setting up ${name}.${TOP_LEVEL_DOMAIN}"

export LC_GROKDOMAIN="${name}.${TOP_LEVEL_DOMAIN}"

ssh user@example.com -o ControlPath=none -o SendEnv=LC_GROKDOMAIN -R /tmp/$LC_GROKDOMAIN.socket:127.0.0.1:$port

}

grok "$@"

Conclusion

I have been using this quite a bit since building it and the quirks definitely make the experience a little less than ideal. But I also don’t have to run any new applications on my server, don’t have new credentials to worry about, and it works well enough that it’s easy for me to quickly send someone a customized link to a development tunnel.

Should you use this? I’m not sure, really. It feels kinda sketchy to pass the Host header directly to the filesystem, and I’m not particularly happy with giving the whole server read/write to the socket file either.

But it was fun to piece these two pieces of technology together in this surprisingly useful way, using knowledge that isn’t particularly arcane but also isn’t particularly common.

Please tweet me with ways you’d improve this. You can also message me on Bluesky (@0xda.de) or Mastodon (@dade@crime.st).

Errata

Improving socket cleanup

HN User mcint recommended using bash’s trap command to clean up the socket on disconnect. By itself this didn’t work because it automatically removed the socket when .ssh/rc finished running. But in awful, inelegant fashion, I combined it with an infinite sleep loop and was able to get a much more reliable cleanup mechanism.

This seems to clean up no matter what – I can ctrl+c and it’ll clean up. I can kill the ssh connection and it’ll clean up. I can kill the ssh connection through my bastion host, get a broken pipe, and it’ll clean up. It’s a considerably more robust cleanup, and equally as hacky to boot.

if [ -n "$LC_GROKDOMAIN" ]; then

chmod o+rw /tmp/$LC_GROKDOMAIN.socket 2>/dev/null

clean_socket() { rm /tmp/$LC_GROKDOMAIN.socket 2>/dev/null; }

echo "You can now visit https://$LC_GROKDOMAIN in your browser"

trap clean_socket EXIT INT HUP;

while true; do sleep 60; done

fi
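The reason the loop is needed can be seen in isolation: an EXIT trap fires as soon as the shell that set it exits. The sketch below uses a temp file as a stand-in for the socket, and the function name demo_exit_trap is made up for the example.

```bash
#!/bin/bash
# Hypothetical demo: an EXIT trap in a short-lived shell runs immediately,
# which is why the rc script above has to stay alive for the tunnel's lifetime.
demo_exit_trap() {
  local marker
  marker=$(mktemp)               # stand-in for /tmp/$LC_GROKDOMAIN.socket
  (
    trap 'rm -f "$marker"' EXIT
    :                            # no loop here, so the subshell exits right away
  )
  [ ! -e "$marker" ]             # the marker is already gone
}
```

Without something like the sleep loop, the same thing happens to the real socket the moment .ssh/rc returns.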

You can also change your grok script’s ssh command to clean up the output with this in mind, as long as you don’t want an interactive ssh session combined with your dev tunnel.

ssh user@example.com -o ControlPath=none -o SendEnv=LC_GROKDOMAIN -T -R /tmp/$LC_GROKDOMAIN.socket:127.0.0.1:$port echo "hello"

This adds -T, which disables the pseudo-tty allocation. It’s designed to run simple commands on a remote host without a full shell environment. Hence, echo "hello" at the end of the command now. But what that command is is irrelevant, because our .ssh/rc is now infinitely looping and will never let that code execute. It has the added benefit of not printing out the MOTD, so your shell output is quieter.

Security of passing user input to the filesystem path

I also had a couple people ask me about whether or not it was safe to use the $host variable in a path to my filesystem. My understanding of this, based on how nginx processes a request, is yes. The TL;DR of it is that $host should be guaranteed to match my server_name regex by the time it reaches the proxy_pass directive, so long as my dev tunnel server block isn’t also the default server block.

Hypothetically, it might be possible to try to access something like Host: ../etc/passwd.dev.0xda.de.socket – however as long as I’m pretty reasonable about not renaming arbitrary important system files with this naming pattern, it’s probably pretty safe. Also I’m fairly sure, but haven’t looked at the source to confirm, that / isn’t valid in a Host header. Attempting to send one to my nginx server results in a 400 bad request instead of a 502 bad gateway, suggesting that it isn’t matching the server block.